The Existence of the Exponential Function


{{Equation|Init|<math>e(x)=1+x+</math>''(higher order terms)''.}}

Alternative proofs of the existence of <math>e(x)</math> are of course available, including the explicit formula <math>e(x)=\sum_{k=0}^\infty\frac{x^k}{k!}</math>. Thus the value of this paperlet is not in the result it proves but rather in the '''allegorical story''' it tells: that there is a technique to solve functional equations such as {{EqRef|Main}} using homology. There are plenty of other examples of the use of that technique, in which the equation replacing {{EqRef|Main}} isn't as easy (see [[#Further Examples|Further Examples]] below). Thus the exponential function seems to be the easiest illustration of a general principle, and as such it is worth documenting.
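
For comparison, the explicit formula is easy to check directly. This check is not the homological method the paperlet is about. By the binomial theorem, the coefficient of <math>x^iy^j</math> in <math>e(x+y)</math> is <math>\binom{i+j}{i}\frac{1}{(i+j)!}=\frac{1}{i!j!}</math>, which is also its coefficient in <math>e(x)e(y)</math>. Below is a minimal Python sketch of this check in exact rational arithmetic; the function names are illustrative only.

```python
from fractions import Fraction
from math import comb, factorial


def exp_coeff(n):
    """Coefficient of x^n in the explicit series e(x) = sum_k x^k / k!."""
    return Fraction(1, factorial(n))


def main_holds_up_to(N):
    """Compare both sides of e(x+y) = e(x)e(y) in every total degree n <= N.

    Left side: x^i y^j (i + j = n) only arises from (x+y)^n, with coefficient
    binom(n, i) * exp_coeff(n).  Right side: exp_coeff(i) * exp_coeff(j).
    """
    for n in range(N + 1):
        for i in range(n + 1):
            lhs = comb(n, i) * exp_coeff(n)
            rhs = exp_coeff(i) * exp_coeff(n - i)
            if lhs != rhs:
                return False
    return True


print(main_holds_up_to(20))  # True
```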

Thus below we will pretend not to know the exponential function and/or its relationship with the differential equation <math>e'=e</math>.

===Acknowledgment===

Significant parts of this paperlet were contributed by '''Omar Antolin Camarena'''. Further thanks to Yael Karshon, to Peter Lee and to the students of [[06-1350|Math 1350]] (2006) in general.

==The Scheme==

We aim to construct <math>e(x)</math> and solve {{EqRef|Main}} inductively, degree by degree. Equation {{EqRef|Init}} gives <math>e(x)</math> in degrees 0 and 1, and the given formula for <math>e(x)</math> indeed solves {{EqRef|Main}} in degrees 0 and 1, so starting the induction is no problem. Now assume we have found a degree 7 polynomial <math>e_7(x)</math> which solves {{EqRef|Main}} up to and including degree 7, but which, at this stage of the construction, may well fail to solve {{EqRef|Main}} in degree 8. Thus modulo degrees 9 and up, we have

{{Equation|M|<math>e_7(x+y)-e_7(x)e_7(y)=M(x,y)</math>,}}

where <math>M(x,y)</math> is the "mistake for <math>e_7</math>", a certain homogeneous polynomial of degree 8 in the variables <math>x</math> and <math>y</math>.

Our hope is to "fix" the mistake <math>M</math> by replacing <math>e_7(x)</math> with <math>e_8(x)=e_7(x)+\epsilon(x)</math>, where <math>\epsilon(x)</math> is a degree 8 "correction", a homogeneous polynomial of degree 8 in <math>x</math> (well, in this simple case, just a multiple of <math>x^8</math>).

{{Begin Side Note|35%}}*1 The terms containing no <math>\epsilon</math>'s make a copy of the left hand side of {{EqRef|M}}. The terms linear in <math>\epsilon</math> are <math>\epsilon(x+y)</math>, <math>-e_7(x)\epsilon(y)</math> and <math>-\epsilon(x)e_7(y)</math>. Note that since the constant term of <math>e_7</math> is 1 and since we only care about degree 8, the last two terms can be replaced by <math>-\epsilon(y)</math> and <math>-\epsilon(x)</math>, respectively. Finally, we don't even need to look at terms higher than linear in <math>\epsilon</math>, for these have degree 16 or more, high in the stratosphere.

{{End Side Note}}

So we substitute <math>e_8(x)=e_7(x)+\epsilon(x)</math> into <math>e(x+y)-e(x)e(y)</math> (a version of {{EqRef|Main}}), expand, and keep only the low degree terms, those of degree at most 8:<sup>*1</sup>

{{Equation*|<math>e_8(x+y)-e_8(x)e_8(y)=M(x,y)-\epsilon(y)+\epsilon(x+y)-\epsilon(x)</math>.}}

We define a "differential" <math>d:{\mathbb Q}[x]\to{\mathbb Q}[x,y]</math> by <math>(df)(x,y)=f(y)-f(x+y)+f(x)</math>, and the above equation becomes

{{Equation*|<math>e_8(x+y)-e_8(x)e_8(y)=M(x,y)-(d\epsilon)(x,y)</math>.}}
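
Here is a minimal Python sketch of the degree 8 step. It takes a deliberate shortcut: to have a concrete <math>e_7</math> at hand, it uses the exponential series truncated after degree 7, even though the text pretends not to know it. The sketch computes the degree 8 part of the mistake <math>M</math> and checks that <math>\epsilon(x)=x^8/8!</math> satisfies <math>d\epsilon=M</math>. It then checks that <math>e_8=e_7+\epsilon</math> solves {{EqRef|Main}} through degree 8. Polynomials are dictionaries mapping exponent tuples <math>(a,b,c)</math> to the coefficient of <math>x^ay^bz^c</math>. The helper names (padd, pmul, subst and so on) are hypothetical.

```python
from fractions import Fraction
from math import factorial

# A polynomial in x, y, z is a dict {(a, b, c): coefficient}
# standing for the sum of coefficient * x**a * y**b * z**c.


def padd(p, q, s=1):
    """Return p + s*q, dropping zero coefficients."""
    r = dict(p)
    for m, c in q.items():
        r[m] = r.get(m, 0) + s * c
        if r[m] == 0:
            del r[m]
    return r


def pmul(p, q):
    r = {}
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            m = tuple(a + b for a, b in zip(m1, m2))
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def subst(p, forms):
    """Substitute forms[i] for the i-th variable of p (only variables that occur)."""
    r = {}
    for m, c in p.items():
        t = {(0, 0, 0): Fraction(c)}
        for i, e in enumerate(m):
            for _ in range(e):
                t = pmul(t, forms[i])
        r = padd(r, t)
    return r


X = {(1, 0, 0): Fraction(1)}
Y = {(0, 1, 0): Fraction(1)}


def d(f):
    """(d f)(x, y) = f(y) - f(x+y) + f(x), for f a polynomial in x alone."""
    return padd(padd(subst(f, [Y]), subst(f, [padd(X, Y)]), -1), f)


def mistake(e):
    """e(x+y) - e(x) e(y), computed exactly (no truncation)."""
    return padd(subst(e, [padd(X, Y)]), pmul(e, subst(e, [Y])), -1)


def degree_part(p, n):
    return {m: c for m, c in p.items() if sum(m) == n}


# Illustrative shortcut: the exponential series cut off after degree 7 plays e_7.
e7 = {(k, 0, 0): Fraction(1, factorial(k)) for k in range(8)}
M = degree_part(mistake(e7), 8)               # the degree 8 mistake
eps = {(8, 0, 0): Fraction(1, factorial(8))}  # candidate correction x^8/8!
e8 = padd(e7, eps)

print(M == d(eps))                                             # True
print(all(not degree_part(mistake(e8), n) for n in range(9)))  # True
```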

{{Begin Side Note|35%}}*2 It is worth noting that in some a priori sense the existence of a solution of <math>e(x+y)=e(x)e(y)</math> is unexpected. For <math>e</math> must be an element of the relatively small space <math>{\mathbb Q}[[x]]</math> of power series in one variable, but the equation it is required to satisfy lives in the much bigger space <math>{\mathbb Q}[[x,y]]</math> of power series in two variables. Thus in some sense we have more equations than unknowns and a solution is unlikely. How fortunate we are that exponentials do exist, after all!

{{End Side Note}}

To continue with our inductive construction we need to have <math>e_8(x+y)-e_8(x)e_8(y)=0</math>. Hence the existence of the exponential function hinges upon our ability to find an <math>\epsilon</math> for which <math>M=d\epsilon</math>. In other words, we must show that <math>M</math> is in the image of <math>d</math>. This appears hopeless unless we learn more about <math>M</math>: the domain space of <math>d</math> is much smaller than its target space, so <math>d</math> cannot be surjective, and if <math>M</math> were in any sense "random", we simply wouldn't be able to find our correction term <math>\epsilon</math>.<sup>*2</sup>

As we shall see momentarily by "finding syzygies", <math>\epsilon</math> and <math>M</math> fit within the 1st and 2nd chain groups of a rather short complex

{{Equation|Complex|<math>\left(\epsilon\in C^1={\mathbb Q}[[x]]\right)\longrightarrow\left(M\in C^2={\mathbb Q}[[x,y]]\right)\longrightarrow\left(C^3={\mathbb Q}[[x,y,z]]\right)</math>,}}

whose first differential <math>d^1=d</math> was already written and whose second differential is given by <math>(d^2m)(x,y,z)=m(y,z)-m(x+y,z)+m(x,y+z)-m(x,y)</math> for any <math>m\in{\mathbb Q}[[x,y]]</math>. We shall further see that for "our" <math>M</math>, we have <math>d^2M=0</math>. Therefore in order to show that <math>M</math> is in the image of <math>d^1</math>, it suffices to show that the kernel of <math>d^2</math> is equal to the image of <math>d^1</math>, or simply that <math>H^2=0</math>.
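
To see that {{EqRef|Complex}} is indeed a complex, i.e. that <math>d^2\circ d^1=0</math>, one can expand the twelve terms of <math>d^2(d^1f)</math> and watch them cancel in pairs. The Python sketch below uses the same dictionary representation of polynomials, and its helper names are hypothetical. It checks this on the monomials <math>x^n</math>, which by linearity covers all polynomials of bounded degree. It also confirms that <math>d^1x=0</math> and that <math>d^2</math> is not identically zero.

```python
from fractions import Fraction

# Polynomials in x, y, z as dicts {(a, b, c): coefficient}.


def padd(p, q, s=1):
    """Return p + s*q, dropping zero coefficients."""
    r = dict(p)
    for m, c in q.items():
        r[m] = r.get(m, 0) + s * c
        if r[m] == 0:
            del r[m]
    return r


def pmul(p, q):
    r = {}
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            m = tuple(a + b for a, b in zip(m1, m2))
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def subst(p, forms):
    """Substitute forms[i] for the i-th variable of p (only variables that occur)."""
    r = {}
    for m, c in p.items():
        t = {(0, 0, 0): Fraction(c)}
        for i, e in enumerate(m):
            for _ in range(e):
                t = pmul(t, forms[i])
        r = padd(r, t)
    return r


X = {(1, 0, 0): Fraction(1)}
Y = {(0, 1, 0): Fraction(1)}
Z = {(0, 0, 1): Fraction(1)}


def d1(f):
    """(d^1 f)(x, y) = f(y) - f(x+y) + f(x), for f in Q[x]."""
    return padd(padd(subst(f, [Y]), subst(f, [padd(X, Y)]), -1), f)


def d2(m):
    """(d^2 m)(x, y, z) = m(y,z) - m(x+y,z) + m(x,y+z) - m(x,y), for m in Q[x,y]."""
    r = padd(subst(m, [Y, Z]), subst(m, [padd(X, Y), Z]), -1)
    return padd(padd(r, subst(m, [X, padd(Y, Z)])), m, -1)


# d^2 o d^1 = 0 on each monomial x^n, hence on all polynomials of degree <= 10.
print(all(d2(d1({(n, 0, 0): Fraction(1)})) == {} for n in range(11)))  # True
# x is a cocycle: d^1 x = y - (x+y) + x = 0.
print(d1(X) == {})  # True
# d^2 is not identically zero: d^2(x y^2) = 2xyz.
print(d2({(1, 2, 0): Fraction(1)}))  # {(1, 1, 1): Fraction(2, 1)}
```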

==Finding a Syzygy== |

|||

It is an equation written in some strange non-commutative algebra <math>{\mathcal A}^h_4</math>, for and unknown "function" <math>\Phi(a,b)</math> which in itself lives in some non-commutative algebra <math>{\mathcal A}^h_3</math>. This equation is related to tensor categories, to quasi-Hopf algebras and (strange as it may seem) to knot theory and is commonly summarized with either of the following two pictures: |

|||

[[Image: |

[[Image:A Syzygy for Exponentiation.png|320px|right]] |

||

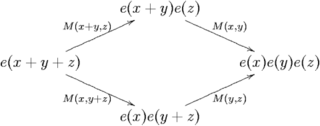

So what kind of relations can we get for <math>M</math>? Well, it measures how close <math>e_7</math> is to turning sums into products, so we can look for preservation of properties that both addition and multiplication have. For example, they're both commutative, so we should have <math>M(x,y)=M(y,x)</math>, and indeed this is obvious from the definition. Now let's try associativity, that is, let's compute <math>e_7(x+y+z)</math> associating first as <math>(x+y)+z</math> and then as <math>x+(y+z)</math>. In the first way we get |

|||

{{Equation*|<math>e_7(x+y+z) = M(x+y,z)+e_7(x+y)e_7(z) = M(x+y,z)+\left(M(x,y)+e_7(x)e_7(y)\right)e_7(z)</math>.}} |

|||

This equation is a close friend of the '''Drinfel'd Hexagon Equation''', and you can read more about both of them at {{ref|Drinfeld_90}}, {{ref|Drinfeld_91}} and {{ref|Bar-Natan_97}}. These equations have many relatives living in many further exotic spaces. |

|||

In the second we get |

|||

'''The Drinfel'd Twist Equation''' is the equation |

|||

{{Equation*|<math> |

{{Equation*|<math>e_7(x+y+z) = M(x,y+z)+e_7(x)e_7(y+z) = M(x+y,z)+e_7(x)\left(M(y,z)+e_7(y)e_7(z)\right)</math>.}} |

||

Comparing these two we get an interesting relation for <math>M</math>: <math>M(x+y,z)+M(x,y)e_7(z) = M(x,y+z) + e_7(x)M(y,z) </math>. Since we'll only use <math>M</math> to find the next highest term, we can be sloppy about all but the first term of <math>M</math>. This means that in the relation we just found we can replace <math>e_7</math> by its constant term, namely 1. Upon rearranging, we get the relation promised for <math>M</math>: <math> d^2M = M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y) = 0</math>. |

|||

This equation is written in another strange non-commutative algebra <math>{\mathcal A}_3</math>, which is a close cousin of <math>{\mathcal A}^h_3</math>. The unknown <math>F</math> lives in the <math>{\mathcal A}_2</math>, and <math>\Phi</math> and <math>\Phi'</math> are solutions of the Drinfel'd pentagons of before. You can read more about this equation at {{ref|Drinfeld_90}}, {{ref|Drinfeld_91}} and {{ref|Le_Murakami_96}}. This equation has many relatives living in many further exotic spaces. |

|||
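
The syzygy can also be observed numerically. The Python sketch below uses the dictionary representation of polynomials, and its helper names are hypothetical. In it, the function mistake(e) computes <math>e(x+y)-e(x)e(y)</math> exactly, with no truncation. The relation <math>M(x+y,z)+M(x,y)e(z)=M(x,y+z)+e(x)M(y,z)</math> then holds identically for every polynomial <math>e</math>. When <math>e(0)=1</math>, the lowest-degree part of <math>M</math> is killed by <math>d^2</math>. Besides the truncated exponential (standing in for <math>e_7</math>), the sketch tries an arbitrarily chosen <math>e</math> that does not solve {{EqRef|Main}} at all.

```python
from fractions import Fraction
from math import factorial

# Polynomials in x, y, z as dicts {(a, b, c): coefficient}.


def padd(p, q, s=1):
    """Return p + s*q, dropping zero coefficients."""
    r = dict(p)
    for m, c in q.items():
        r[m] = r.get(m, 0) + s * c
        if r[m] == 0:
            del r[m]
    return r


def pmul(p, q):
    r = {}
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            m = tuple(a + b for a, b in zip(m1, m2))
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def subst(p, forms):
    """Substitute forms[i] for the i-th variable of p (only variables that occur)."""
    r = {}
    for m, c in p.items():
        t = {(0, 0, 0): Fraction(c)}
        for i, e in enumerate(m):
            for _ in range(e):
                t = pmul(t, forms[i])
        r = padd(r, t)
    return r


X, Y, Z = {(1, 0, 0): Fraction(1)}, {(0, 1, 0): Fraction(1)}, {(0, 0, 1): Fraction(1)}


def d2(m):
    """m(y,z) - m(x+y,z) + m(x,y+z) - m(x,y)."""
    r = padd(subst(m, [Y, Z]), subst(m, [padd(X, Y), Z]), -1)
    return padd(padd(r, subst(m, [X, padd(Y, Z)])), m, -1)


def mistake(e):
    """M(x, y) = e(x+y) - e(x) e(y), exactly, for e a polynomial in x."""
    return padd(subst(e, [padd(X, Y)]), pmul(e, subst(e, [Y])), -1)


def lowest_part(p):
    n = min(sum(m) for m in p)
    return {m: c for m, c in p.items() if sum(m) == n}


def associativity_relation_holds(e):
    """M(x+y,z) + M(x,y) e(z) == M(x,y+z) + e(x) M(y,z), exactly."""
    M = mistake(e)
    lhs = padd(subst(M, [padd(X, Y), Z]), pmul(M, subst(e, [Z])))
    rhs = padd(subst(M, [X, padd(Y, Z)]), pmul(e, subst(M, [Y, Z])))
    return lhs == rhs


e7 = {(k, 0, 0): Fraction(1, factorial(k)) for k in range(8)}  # truncated exponential
bad = {(0, 0, 0): Fraction(1), (1, 0, 0): Fraction(1),          # 1 + x + x^2 + 3x^3,
       (2, 0, 0): Fraction(1), (3, 0, 0): Fraction(3)}          # far from a solution
for e in (e7, bad):
    M_low = lowest_part(mistake(e))
    print(associativity_relation_holds(e), d2(M_low) == {})    # prints True True each time
```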

==Computing the Homology, Easy but Limited==

Now let's prove that <math>H^2=0</math> for our (piece of) chain complex. That is, letting <math>M(x,y) \in \mathbb{Q}[[x,y]]</math> be such that <math>d^2M=0</math>, we'll prove that for some <math>\epsilon(x) \in \mathbb{Q}[[x]]</math> we have <math>d^1\epsilon = M</math>.

Write the two power series as <math>\epsilon(x) = \sum{\frac{\alpha_i}{i!}x^i}</math> and <math>M(x,y) = \sum{\frac{m_{ij}}{i!j!}x^i y^j}</math>, where the <math>\alpha_i</math> are the unknowns we wish to solve for.

The coefficient of <math>x^i y^j z^k</math> in <math>M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y)</math> is

{{Equation*|<math>\frac{\delta_{i0} m_{jk}}{j!k!} - {{i+j} \choose i} \frac{m_{i+j,k}}{(i+j)!k!} + {{j+k} \choose j} \frac{m_{i,j+k}}{i!(j+k)!} - \frac{\delta_{k0} m_{ij}}{i!j!}</math>.}}

Here, <math>\delta_{i0}</math> is a Kronecker delta: 1 if <math>i=0</math> and 0 otherwise. Since <math>d^2 M = 0</math>, this coefficient is zero. Multiplying by <math>i!j!k!</math> (and noting that, for example, the first term doesn't need an <math>i!</math> since the delta is only nonzero when <math>i=0</math>) we get:

{{Equation|m|<math>\delta_{i0} m_{jk} - m_{i+j,k} + m_{i,j+k} - \delta_{k0} m_{ij}=0</math>.}}
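
Here is a concrete check of {{EqRef|m}}. Let <math>e(x)=\sum_k a_kx^k</math> and <math>A_k=k!\,a_k</math>. Expanding <math>(x+y)^{i+j}</math> gives <math>m_{ij}=A_{i+j}-A_iA_j</math> for the normalized coefficients of <math>M=e(x+y)-e(x)e(y)</math>. The Python sketch below works in exact rationals and uses hypothetical helper names. It computes these coefficients in the lowest degree of <math>M</math>, which is where <math>d^2M=0</math> applies. It then tests {{EqRef|m}} for all <math>i,j,k\geq0</math> summing to that degree.

```python
from fractions import Fraction
from math import factorial


def m_coeffs(a, n):
    """Normalized coefficients m_{ij} = i! j! [x^i y^j] M, for i + j = n, of
    M(x, y) = e(x+y) - e(x) e(y), where e(x) = sum_k a[k] x^k.

    Expanding (x+y)^n gives [x^i y^j] e(x+y) = binom(n, i) a_n, so with
    A_k = k! a_k one gets m_{ij} = A_{i+j} - A_i A_j.
    """
    A = [factorial(k) * Fraction(c) for k, c in enumerate(a)]
    A += [Fraction(0)] * (n + 1)  # coefficients beyond deg e are zero
    return {(i, n - i): A[n] - A[i] * A[n - i] for i in range(n + 1)}


def relation_m_holds(m, n):
    """Check delta_{i0} m_{jk} - m_{i+j,k} + m_{i,j+k} - delta_{k0} m_{ij} = 0
    for all i, j, k >= 0 with i + j + k = n."""
    def get(i, j):
        return m.get((i, j), 0)
    for i in range(n + 1):
        for j in range(n + 1 - i):
            k = n - i - j
            total = ((i == 0) * get(j, k) - get(i + j, k)
                     + get(i, j + k) - (k == 0) * get(i, j))
            if total != 0:
                return False
    return True


e7 = [Fraction(1, factorial(k)) for k in range(8)]  # truncated exponential
m8 = m_coeffs(e7, 8)
print(m8[(3, 5)], m8[(0, 8)])   # -1 0
print(relation_m_holds(m8, 8))  # True
# Another e with e(0) = 1: 1 + x + x^2 + 3x^3, whose mistake starts in degree 2.
print(relation_m_holds(m_coeffs([1, 1, 1, 3], 2), 2))  # True
```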

An entirely analogous computation (taking the coefficient of <math>x^iy^j</math> in <math>d^1\epsilon=M</math> and multiplying by <math>i!j!</math>) shows that the equations we must solve boil down to <math>\delta_{i0} \alpha_j - \alpha_{i+j} + \delta_{j0} \alpha_i = m_{ij}</math>.

By setting <math>i=j=0</math> in this last equation we see that <math>\alpha_0 = m_{00}</math>. Now let <math>i</math> and <math>j</math> be arbitrary ''positive'' integers. This solves for most of the coefficients: <math>\alpha_{i+j}=-m_{ij}</math>. Any integer at least two can be written as <math>i+j</math> with <math>i,j\geq 1</math>, so this determines all of the <math>\alpha_n</math> for <math>n \ge 2</math>. We just need to prove that <math>\alpha_n</math> is well defined, that is, that <math>m_{ij}</math> (for positive <math>i</math> and <math>j</math>) doesn't depend on <math>i</math> and <math>j</math> separately but only on their sum.

But when <math>i</math> and <math>k</math> are strictly positive, the relation {{EqRef|m}} reads <math>m_{i+j,k} = m_{i,j+k}</math>, which shows that we can "transfer" <math>j</math> from one index to the other, which is what we wanted.
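
Putting the pieces together gives an algorithm. At step <math>n</math> the correction <math>\epsilon</math> is homogeneous of degree <math>n</math>, so only <math>\alpha_n</math> is new, and the rule <math>\alpha_n=-m_{ij}</math> (any <math>i,j\geq1</math> with <math>i+j=n</math>) determines it. Below is a minimal Python sketch of the resulting degree-by-degree construction. It assumes that <math>e</math> is stored through its normalized coefficients <math>A_n=n!\cdot[x^n]e</math>, so that the <math>\alpha_n</math> of the step-<math>n</math> correction becomes <math>A_n</math>. It also assumes the mistake is computed with the formula <math>m_{ij}=A_{i+j}-A_iA_j</math>, obtained by expanding <math>e(x+y)</math>. The function name and its optional argument for <math>\alpha_1</math> are illustrative only. The assertions check, at every step, the well-definedness just discussed and the necessary conditions <math>m_{0n}=m_{n0}=0</math>.

```python
from fractions import Fraction


def build_exponential(N, alpha1=1):
    """Construct e(x) = sum_n A[n] x^n / n! degree by degree.

    A[n] = n! * (coefficient of x^n); at step n it equals the alpha_n of the
    degree-n correction eps(x) = alpha_n x^n / n!.  The normalized mistake of
    the current approximation is m_{ij} = A[i+j] - A[i] A[j] for i + j = n.
    """
    A = [Fraction(1), Fraction(alpha1)]  # degrees 0 and 1
    for n in range(2, N + 1):
        A.append(Fraction(0))            # e_{n-1} has no x^n term yet
        m = {i: A[n] - A[i] * A[n - i] for i in range(n + 1)}  # m[i] = m_{i, n-i}
        # Necessary conditions m_{0n} = m_{n0} = 0.
        assert m[0] == 0 and m[n] == 0
        # Well-definedness: -m_{ij} is the same for every split n = i + j, i, j >= 1.
        candidates = {-m[i] for i in range(1, n)}
        assert len(candidates) == 1
        A[n] = candidates.pop()          # alpha_n = -m_{ij}
        # The corrected approximation e_n makes no degree-n mistake.
        assert all(A[n] - A[i] * A[n - i] == 0 for i in range(n + 1))
    return A


print(build_exponential(8) == [1] * 9)                          # True
print(build_exponential(5, 2) == [2 ** n for n in range(6)])    # True
```

With the default <math>\alpha_1=1</math> every <math>A_n</math> comes out as 1, that is, <math>e(x)=\sum x^n/n!</math>. Starting instead from <math>\alpha_1=2</math> yields <math>A_n=2^n</math>, the coefficients of <math>e^{2x}</math>. This reflects the fact that <math>\alpha_1</math> is not determined by {{EqRef|Main}} alone; it is fixed by {{EqRef|Init}}.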

It only remains to find <math>\alpha_1</math>, but it's easy to see that it cannot be determined: if <math>\epsilon</math> satisfies <math>d^1\epsilon = M</math>, then so does <math>\epsilon(x)+kx</math> for any <math>k</math>, so <math>\alpha_1</math> is arbitrary. How do our coefficient equations tell us this?

Well, we can't find a single equation for <math>\alpha_1</math>! We've already tried taking both <math>i</math> and <math>j</math> to be zero, and also taking them both positive. The only remaining option is to take one of them zero and the other positive. Doing so gives two necessary conditions for the existence of the <math>\alpha_n</math>: <math>m_{0r} = m_{r0} = 0</math> for <math>r>0</math>. So no equation for <math>\alpha_1</math> comes up, but we're still not done: these conditions must be verified. Fortunately, taking one of <math>i</math> and <math>k</math> to be zero and the other positive in the relation {{EqRef|m}} does the trick: <math>i=0<k</math> gives <math>m_{0,j+k}=0</math>, and <math>k=0<i</math> gives <math>m_{i+j,0}=0</math>.
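
The statement <math>H^2=0</math>, and the one-dimensional freedom in degree 1, can also be confirmed by brute-force linear algebra, degree by degree. The Python sketch below builds the images of the monomial bases under <math>d^1</math> and <math>d^2</math>. It computes ranks over <math>{\mathbb Q}</math> by Gaussian elimination and returns <math>\dim\ker d^1</math> together with <math>\dim\ker d^2-\operatorname{rank}d^1</math> in degree <math>n</math>. It is only a finite check for small <math>n</math>, not a proof, and all names in it are hypothetical.

```python
from fractions import Fraction

# Polynomials in x, y, z as dicts {(a, b, c): coefficient}.


def padd(p, q, s=1):
    """Return p + s*q, dropping zero coefficients."""
    r = dict(p)
    for m, c in q.items():
        r[m] = r.get(m, 0) + s * c
        if r[m] == 0:
            del r[m]
    return r


def pmul(p, q):
    r = {}
    for m1, c1 in p.items():
        for m2, c2 in q.items():
            m = tuple(a + b for a, b in zip(m1, m2))
            r[m] = r.get(m, 0) + c1 * c2
    return {m: c for m, c in r.items() if c != 0}


def subst(p, forms):
    """Substitute forms[i] for the i-th variable of p (only variables that occur)."""
    r = {}
    for m, c in p.items():
        t = {(0, 0, 0): Fraction(c)}
        for i, e in enumerate(m):
            for _ in range(e):
                t = pmul(t, forms[i])
        r = padd(r, t)
    return r


X, Y, Z = {(1, 0, 0): Fraction(1)}, {(0, 1, 0): Fraction(1)}, {(0, 0, 1): Fraction(1)}


def d1(f):  # f(y) - f(x+y) + f(x)
    return padd(padd(subst(f, [Y]), subst(f, [padd(X, Y)]), -1), f)


def d2(m):  # m(y,z) - m(x+y,z) + m(x,y+z) - m(x,y)
    r = padd(subst(m, [Y, Z]), subst(m, [padd(X, Y), Z]), -1)
    return padd(padd(r, subst(m, [X, padd(Y, Z)])), m, -1)


def rank(vectors):
    """Rank over Q of sparse vectors (dicts), by Gaussian elimination.

    Each stored row is keyed by its smallest key (its pivot); eliminating the
    pivots in increasing order only creates larger keys, so after the loop a
    new vector contains no existing pivot.
    """
    rows = {}
    for v in vectors:
        for key in sorted(rows):
            if key in v:
                v = padd(v, rows[key], -v[key] / rows[key][key])
        if v:
            rows[min(v)] = v
    return len(rows)


def cohomology_dims(n):
    """(dim ker d^1, dim ker d^2 - rank d^1) on the degree-n piece of the complex."""
    C1 = [{(n, 0, 0): Fraction(1)}]
    C2 = [{(i, n - i, 0): Fraction(1)} for i in range(n + 1)]
    r1 = rank([d1(f) for f in C1])
    r2 = rank([d2(m) for m in C2])
    return len(C1) - r1, (len(C2) - r2) - r1


for n in range(8):
    print(n, cohomology_dims(n))
```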

==Computing the Homology, Hard but Rewarding==

Let <math>C_n^k</math> denote the space of degree <math>n</math> polynomials in (commuting) variables <math>x_1,\ldots,x_k</math> (with rational coefficients) and let <math>d^k:C_n^k\to C_n^{k+1}</math> be defined by <math>d^k=\sum_{i=0}^{k+1}(-1)^i d^k_i</math>, where <math>(d^k_0f)(x_1,\ldots,x_{k+1}):=f(x_2,\ldots,x_{k+1})</math>, <math>(d^k_if)(x_1,\ldots,x_{k+1}):=f(x_1,\ldots,x_i+x_{i+1},\ldots,x_{k+1})</math> for <math>1\leq i\leq k</math> and <math>(d^k_{k+1}f)(x_1,\ldots,x_{k+1}):=f(x_1,\ldots,x_k)</math>. It is easy to verify that <math>{\mathcal C}_n:=(C_n^\star, d)</math> is a chain complex, and that (for <math>k=1,2,3</math>) it agrees with the degree <math>n</math> piece of the complex in {{EqRef|Complex}}. We need to show that <math>H^2({\mathcal C}_n)=0</math> for <math>n>1</math> (we don't need the vanishing of <math>H^2</math> for <math>n=0,1</math> as these degrees are covered by the initial condition {{EqRef|Init}}). This follows from the following theorem.

'''Theorem.''' <math>H^1({\mathcal C}_1)</math> is <math>{\mathbb Q}</math>; otherwise <math>H^k({\mathcal C}_n)=0</math>.

'''Proof''' (sketch). It is easy to verify "by hand" that <math>\dim H^k({\mathcal C}_1)=\delta_{k1}</math>. For <math>n>1</math> let <math>{\mathcal C}_1^{\otimes n}</math> be the <math>n</math>th interior tensor power of <math>{\mathcal C}_1</math>, whose <math>k</math>th chain group is <math>(C_1^k)^{\otimes n}</math> and whose differential is defined using the diagonal action of the <math>d^k_i</math>'s. The permutation group <math>S_n</math> acts on <math>{\mathcal C}_1^{\otimes n}</math> by permuting the tensor factors. If <math>R</math> denotes the trivial representation of <math>S_n</math>, then <math>R\otimes_{S_n}{\mathcal C}_1^{\otimes n}={\mathcal C}_n</math>, and so

{{Equation*|<math>H^\star({\mathcal C}_n) = H^\star(R\otimes_{S_n}{\mathcal C}_1^{\otimes n}) = R\otimes_{S_n}H^\star({\mathcal C}_1^{\otimes n}) = R\otimes_{S_n}H^\star({\mathcal C}_1)^{\otimes n}</math>}}

and the result readily follows (<math>H^\star({\mathcal C}_1)^{\otimes n}</math> is one-dimensional, sitting in degree <math>n</math>, and as it is generated by a product of degree 1 classes, <math>S_n</math> acts on it by the sign representation, whose coinvariants vanish for <math>n>1</math>). Note that the second equality holds because taking <math>S_n</math>-coinvariants is exact over <math>{\mathbb Q}</math>, and that the last equality uses the Eilenberg-Zilber-Künneth formula, which holds because <math>{\mathcal C}_n</math> (and especially <math>{\mathcal C}_1</math>) is a co-simplicial space with the <math>d^k_i</math>'s as co-face maps and with <math>(s^k_i f)(x_1,\ldots,x_{k-1}):=f(x_1,\ldots,x_{i-1},0,x_i,\ldots,x_{k-1})</math> as co-degeneracies.

==Further Examples==

Let us briefly list a number of other places in mathematics where similar "non-linear algebraic functional equations" need to be solved. The techniques we have developed to solve {{EqRef|Main}} can be applied in all of those cases, though sometimes they are fully successful and sometimes something breaks down along the way.

{{Begin Side Note|35%}} In knot theory, solving the Duflo equation below is as easy as calculating 1+1=2 on an abacus:

[[Image:One Plus One on an Abacus.png|center|250px]]

See {{ref|Bar-Natan_Le_Thurston_03}}.

{{End Side Note}}

===The "Duflo Homomorphism" Equation===

This is the equation

{{Equation*|<math>(\Delta\otimes 1)\Upsilon = \Upsilon^{13}\Upsilon^{23}</math>,}}

written in <math>\left(S(L^\star)_L\otimes S(L^\star)_L\otimes U(L)\right)^L</math>, for an unknown <math>\Upsilon\in \left(S(L^\star)_L\otimes U(L)\right)^L</math> where <math>L</math> is a Lie algebra. With appropriate qualifications, <math>S(L^\star)\otimes U(L)=\operatorname{Hom}(S(L),U(L))</math>, and our equation becomes the statement "<math>\Upsilon</math> is an algebra homomorphism between the invariants of <math>S(L)</math> and the invariants of <math>U(L)</math>", and its solution is the so-called "Duflo homomorphism". This is a typical "'''homomorphism wanted'''" equation and it has many relatives, including our primary example <math>e(x+y)=e(x)e(y)</math>, which is also known as the statement "the additive group of <math>{\mathbb R}</math> is isomorphic to the multiplicative group of <math>{\mathbb R}_+</math>". Note that in general, the equation for being a homomorphism, say <math>\varphi(xy)=\varphi(x)\varphi(y)</math>, is non-linear in the homomorphism <math>\varphi</math> itself.

'''Where from cometh the syzygies?''' As in the case of the exponential function, they come from the fact that for a homomorphism, associativity in the target follows from associativity in the domain.

===The "Formal Quantization" Equation===

This is the equation

{{Equation*|<math>(f\star g)\star h = f\star(g\star h),</math>}}

[[Image:The Pentagon for an Associative Product.png|right]]

written for an unknown "<math>\star</math>-product", within a certain complicated space of "potential products" which resembles <math>\operatorname{Hom}(V\otimes V,V)</math> or <math>V^\star\otimes V^\star\otimes V</math> for some vector space <math>V</math>. This is a typical "'''algebraic structure wanted'''" equation, in which the unknown is an "algebraic structure" (an associative product <math>\star</math>, in this case) and the equation is "the structure satisfies a law" (the associative law, in our case). Note that algebraic laws are often non-linear in the structure that they govern (the example relevant here is that the associative law is "quadratic as a function of the product"). Other examples abound, with the "structure" replaced by a "bracket" or anything else, and the "law" replaced by "Jacobi's equation" or whatever you fancy.

'''Where from cometh the syzygies?''' From the pentagon shown on the right.

===The Drinfel'd Pentagon Equation=== |

|||

<math>e_7(x+y+z)=M(x,y+z)+e_7(x)e_7(y+z)=M(x+y,z)+e_7(x)\left(M(y,z)+e_7(y)e_7(z)\right)</math>. |

|||

This is the equation |

|||

{{Equation*|<math>\Phi(t^{12},t^{23}) \Phi(t^{12}+t^{13},t^{24}+t^{34}) \Phi(t^{23},t^{34}) = \Phi(t^{13}+t^{23},t^{34}) \Phi(t^{12}, t^{24}+t^{34})</math>.}} |

|||

Comparing these two we get an interesting relation for <math>M</math>: <math>M(x+y,z)+M(x,y)e_7(z) = M(x,y+z) + e_7(x)M(y,z) </math>. Since we'll only use <math>M</math> to find the next highest term, we can be sloppy about all but the first term of <math>M</math>. This means that in the relation we just found we can replace <math>e_7</math> by its constant term, namely 1. Upon rearranging, we get the relation promised for <math>M</math>: <math> d^2M = M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y) = 0</math>. |

|||

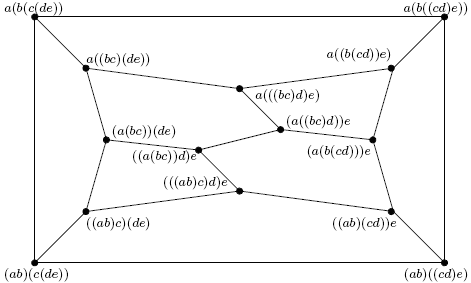

It is an equation written in some strange non-commutative algebra <math>{\mathcal A}^h_4</math>, for an unknown "function" <math>\Phi(a,b)</math> which in itself lives in some non-commutative algebra <math>{\mathcal A}^h_3</math>. This equation is related to tensor categories, to quasi-Hopf algebras and (strange as it may seem) to knot theory, and is commonly summarized by either of the following two pictures: |

|||

==Computing the Homology== |

|||

[[Image:Two Forms of the Pentagon.png|center]] |

|||

Now let's prove that <math>H^2=0</math> for our (piece of) chain complex. That is, letting <math>M(x,y) \in \mathbb{Q}[[x,y]]</math> be such that <math>d^2M=0</math>, we'll prove that for some <math>E(x) \in \mathbb{Q}[[x]]</math> we have <math> d^1E = M </math>. |

|||

This equation is a close friend of the '''Drinfel'd Hexagon Equation''', and you can read more about both of them at {{ref|Drinfeld_90}}, {{ref|Drinfeld_91}} and {{ref|Bar-Natan_97}}. These equations have many relatives living in many further exotic spaces. |

|||

Write the two power series as <math> E(x) = \sum{\frac{e_i}{i!}x^i} </math> and <math> M(x,y) = \sum{\frac{m_{ij}}{i!j!}x^i y^j} </math>, where the <math>e_i</math> are the unknowns we wish to solve for. |

|||

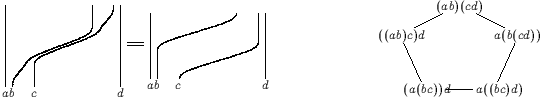

'''Where from cometh the syzygies?''' Here the equations come from the pentagon, so the syzygies must come from somewhere more complicated - the "Stasheff Polyhedron": |

|||

The coefficient of <math>x^i y^j z^k</math> in <math> M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y)</math> is |

|||

[[Image:The Stasheff Polyhedron.png|center]] |

|||

<math> \frac{\delta_{i0} m_{jk}}{j!k!} - {{i+j} \choose i} \frac{m_{i+j,k}}{(i+j)!k!} + |

|||

{{j+k} \choose j} \frac{m_{i,j+k}}{i!(j+k)!} - \frac{\delta_{k0} m_{ij}}{i!j!}. </math> |

|||

===The Drinfel'd Twist Equation===

This is the equation

{{Equation*|<math>\Phi'(x,y,z)=F^{-1}(x+y,z)F^{-1}(x,y)\Phi F(y,z)F(x,y+z)</math>.}}

This equation is written in another strange non-commutative algebra <math>{\mathcal A}_3</math>, which is a superset of <math>{\mathcal A}^h_3</math>. The unknown <math>F</math> lives in some space <math>{\mathcal A}_2</math>, and <math>\Phi</math> and <math>\Phi'</math> are solutions of the Drinfel'd pentagon equation above. You can read more about this equation at {{ref|Drinfeld_90}}, {{ref|Drinfeld_91}} and {{ref|Le_Murakami_96}}. This equation has many relatives living in many further exotic spaces.

'''Where from cometh the syzygies?''' Again from the pentagon, but in a different way.

[[Image:06-1350-R4.svg|200px|right]]

===The Braidor Equation===

This is the equation

{{Equation*|<math>B(x_1,x_2,x_3) B(x_1+x_3,x_2,x_4) B(x_1,x_3,x_4)</math><math>=</math><math>B(x_1+x_2,x_3,x_4) B(x_1,x_2,x_4) B(x_1+x_4,x_2,x_3)</math>,}}

written in <math>{\mathcal A}_4</math> for an unknown <math>B\in{\mathcal A}_3</math>. It is related to the picture on the right and through it to the third Reidemeister move of braid theory and knot theory. At present, the only place to read more about it is [[06-1350/Homework Assignment 4]].

'''Where from cometh the syzygies?''' The third Reidemeister move comes from resolving a triple point. The corresponding syzygy comes from resolving a quadruple point. See a picture at [[06-1350/Homework Assignment 4]].

===Further Further Examples===

This subsection is by definition forever empty, for if a worthwhile further further example comes to mind, mine or yours, it should be added as a subsection right above.

{{:The Existence of the Exponential Function/References}} |

{{:The Existence of the Exponential Function/References}} |

||

Latest revision as of 10:36, 28 November 2019


Introduction

The purpose of this paperlet is to use some homological algebra in order to prove the existence of a power series [math]\displaystyle{ e(x) }[/math] (with coefficients in [math]\displaystyle{ {\mathbb Q} }[/math]) which satisfies the non-linear equation

[math]\displaystyle{ e(x+y)=e(x)e(y) }[/math]     [Main]

as well as the initial condition

[math]\displaystyle{ e(x)=1+x+ }[/math](higher order terms).     [Init]

Alternative proofs of the existence of [math]\displaystyle{ e(x) }[/math] are of course available, including the explicit formula [math]\displaystyle{ e(x)=\sum_{k=0}^\infty\frac{x^k}{k!} }[/math]. Thus the value of this paperlet is not in the result it proves but rather in the allegorical story it tells: that there is a technique to solve functional equations such as [Main] using homology. There are plenty of other examples for the use of that technique, in which the equation replacing [Main] isn't as easy (see Further Examples below). Thus the exponential function seems to be the easiest illustration of a general principle and as such it is worthy of documenting.

Thus below we will pretend not to know the exponential function and/or its relationship with the differential equation [math]\displaystyle{ e'=e }[/math].

Acknowledgment

Significant parts of this paperlet were contributed by Omar Antolin Camarena. Further thanks to Yael Karshon, to Peter Lee and to the students of Math 1350 (2006) in general.

The Scheme

We aim to construct [math]\displaystyle{ e(x) }[/math] and solve [Main] inductively, degree by degree. Equation [Init] gives [math]\displaystyle{ e(x) }[/math] in degrees 0 and 1, and the given formula for [math]\displaystyle{ e(x) }[/math] indeed solves [Main] in degrees 0 and 1. So booting the induction is no problem. Now assume we've found a degree 7 polynomial [math]\displaystyle{ e_7(x) }[/math] which solves [Main] up to and including degree 7, but at this stage of the construction, it may well fail to solve [Main] in degree 8. Thus modulo degrees 9 and up, we have

[math]\displaystyle{ e_7(x+y)-e_7(x)e_7(y)=M(x,y) }[/math]     [M]

where [math]\displaystyle{ M(x,y) }[/math] is the "mistake for [math]\displaystyle{ e_7 }[/math]", a certain homogeneous polynomial of degree 8 in the variables [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math].

Our hope is to "fix" the mistake [math]\displaystyle{ M }[/math] by replacing [math]\displaystyle{ e_7(x) }[/math] with [math]\displaystyle{ e_8(x)=e_7(x)+\epsilon(x) }[/math], where [math]\displaystyle{ \epsilon(x) }[/math] is a degree 8 "correction", a homogeneous polynomial of degree 8 in [math]\displaystyle{ x }[/math] (well, in this simple case, just a multiple of [math]\displaystyle{ x^8 }[/math]).

*1 The terms containing no [math]\displaystyle{ \epsilon }[/math]'s make a copy of the left hand side of [M]. The terms linear in [math]\displaystyle{ \epsilon }[/math] are [math]\displaystyle{ \epsilon(x+y) }[/math], [math]\displaystyle{ -e_7(x)\epsilon(y) }[/math] and [math]\displaystyle{ -\epsilon(x)e_7(y) }[/math]. Note that since the constant term of [math]\displaystyle{ e_7 }[/math] is 1 and since we only care about degree 8, the last two terms can be replaced by [math]\displaystyle{ -\epsilon(y) }[/math] and [math]\displaystyle{ -\epsilon(x) }[/math], respectively. Finally, we don't even need to look at terms higher than linear in [math]\displaystyle{ \epsilon }[/math], for these have degree 16 or more, high in the stratosphere.

So we substitute [math]\displaystyle{ e_8(x)=e_7(x)+\epsilon(x) }[/math] into [math]\displaystyle{ e(x+y)-e(x)e(y) }[/math] (a version of [Main]), expand, and keep only the low degree terms, those of degree at most 8:*1

[math]\displaystyle{ e_8(x+y)-e_8(x)e_8(y)=M(x,y)+\epsilon(x+y)-\epsilon(x)-\epsilon(y). }[/math]

We define a "differential" [math]\displaystyle{ d:{\mathbb Q}[x]\to{\mathbb Q}[x,y] }[/math] by [math]\displaystyle{ (df)(x,y)=f(y)-f(x+y)+f(x) }[/math], and the above equation becomes

[math]\displaystyle{ e_8(x+y)-e_8(x)e_8(y)=M-d\epsilon. }[/math]

*2 It is worth noting that in some a priori sense the existence of a solution of [math]\displaystyle{ e(x+y)=e(x)e(y) }[/math] is unexpected. For [math]\displaystyle{ e }[/math] must be an element of the relatively small space [math]\displaystyle{ {\mathbb Q}[[x]] }[/math] of power series in one variable, but the equation it is required to satisfy lives in the much bigger space [math]\displaystyle{ {\mathbb Q}[[x,y]] }[/math] of power series in two variables. Thus in some sense we have more equations than unknowns and a solution is unlikely. How fortunate we are that exponentials do exist, after all!

To continue with our inductive construction we need to have that [math]\displaystyle{ e_8(x+y)-e_8(x)e_8(y)=0 }[/math] (modulo degrees 9 and up). Hence the existence of the exponential function hinges upon our ability to find an [math]\displaystyle{ \epsilon }[/math] for which [math]\displaystyle{ M=d\epsilon }[/math]. In other words, we must show that [math]\displaystyle{ M }[/math] is in the image of [math]\displaystyle{ d }[/math]. This appears hopeless unless we learn more about [math]\displaystyle{ M }[/math], for the domain space of [math]\displaystyle{ d }[/math] is much smaller than its target space and thus [math]\displaystyle{ d }[/math] cannot be surjective, and if [math]\displaystyle{ M }[/math] were in any sense "random", we simply wouldn't be able to find our correction term [math]\displaystyle{ \epsilon }[/math].*2

As we shall see momentarily by "finding syzygies", [math]\displaystyle{ \epsilon }[/math] and [math]\displaystyle{ M }[/math] fit within the 1st and 2nd chain groups of a rather short complex

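[math]\displaystyle{ \left(\epsilon\in{\mathbb Q}[[x]]\right)\stackrel{d^1}{\longrightarrow}\left(M\in{\mathbb Q}[[x,y]]\right)\stackrel{d^2}{\longrightarrow}{\mathbb Q}[[x,y,z]] }[/math]     [Complex]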

whose first differential was already written and whose second differential is given by [math]\displaystyle{ (d^2m)(x,y,z)=m(y,z)-m(x+y,z)+m(x,y+z)-m(x,y) }[/math] for any [math]\displaystyle{ m\in{\mathbb Q}[[x,y]] }[/math]. We shall further see that for "our" [math]\displaystyle{ M }[/math], we have [math]\displaystyle{ d^2M=0 }[/math]. Therefore in order to show that [math]\displaystyle{ M }[/math] is in the image of [math]\displaystyle{ d^1 }[/math], it suffices to show that the kernel of [math]\displaystyle{ d^2 }[/math] is equal to the image of [math]\displaystyle{ d^1 }[/math], or simply that [math]\displaystyle{ H^2=0 }[/math].
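The scheme is concrete enough to run. The following is a minimal Python sketch of it. It is our own illustration rather than part of the original argument, and the helper names mistake, d1 and build_exponential are hypothetical. The sketch stores [math]\displaystyle{ e(x) }[/math] by its plain coefficients a[k] as exact fractions and starts from [Init]. At each degree n it computes the mistake [math]\displaystyle{ M }[/math] and looks for a correction [math]\displaystyle{ \epsilon(x)=c\,x^n }[/math] with [math]\displaystyle{ d\epsilon=M }[/math] by matching coefficients. It then asserts that such a c exists. That existence is what the homological argument guarantees in general; here it is only checked degree by degree.

```python
from fractions import Fraction
from math import comb


def mistake(a, n):
    """Degree-n part of e(x+y) - e(x)e(y) for e(x) = sum(a[k] * x**k), as {(i, j): coeff of x**i y**j}."""
    return {(i, n - i): a[n] * comb(n, i) - a[i] * a[n - i] for i in range(n + 1)}


def d1(c, n):
    """(d eps)(x, y) = eps(y) - eps(x+y) + eps(x) for the monomial eps(x) = c * x**n, n >= 1."""
    out = {(i, n - i): -c * comb(n, i) for i in range(n + 1)}
    out[(0, n)] += c  # eps(y) = c * y**n
    out[(n, 0)] += c  # eps(x) = c * x**n
    return out


def build_exponential(N):
    """Coefficients a[0], ..., a[N] of e(x), constructed degree by degree (N >= 1)."""
    a = [Fraction(0)] * (N + 1)
    a[0] = a[1] = Fraction(1)  # the initial condition [Init]
    for n in range(2, N + 1):
        M = mistake(a, n)  # a[n] is still 0: this is the mistake of e_{n-1}
        # For 0 < i < n, d(eps) = M says -c * comb(n, i) = M[(i, n - i)];
        # a correction exists only if every i yields the same c.
        candidates = {-M[(i, n - i)] / comb(n, i) for i in range(1, n)}
        assert len(candidates) == 1, "no correction term in this degree"
        c = candidates.pop()
        assert d1(c, n) == M  # eps(x) = c * x**n fixes the whole mistake
        a[n] = c  # e_n(x) = e_{n-1}(x) + c * x**n
        assert not any(mistake(a, n).values())  # [Main] now holds in degree n
    return a


print(build_exponential(5))
# [Fraction(1, 1), Fraction(1, 1), Fraction(1, 2), Fraction(1, 6), Fraction(1, 24), Fraction(1, 120)]
```

The construction recovers [math]\displaystyle{ 1/k! }[/math] without being told about it. Exact fractions are used so that the equality checks are exact rather than approximate.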

Finding a Syzygy

So what kind of relations can we get for [math]\displaystyle{ M }[/math]? Well, it measures how close [math]\displaystyle{ e_7 }[/math] is to turning sums into products, so we can look for preservation of properties that both addition and multiplication have. For example, they're both commutative, so we should have [math]\displaystyle{ M(x,y)=M(y,x) }[/math], and indeed this is obvious from the definition. Now let's try associativity, that is, let's compute [math]\displaystyle{ e_7(x+y+z) }[/math] associating first as [math]\displaystyle{ (x+y)+z }[/math] and then as [math]\displaystyle{ x+(y+z) }[/math]. In the first way we get

[math]\displaystyle{ e_7(x+y+z)=M(x+y,z)+e_7(x+y)e_7(z)=M(x+y,z)+M(x,y)e_7(z)+e_7(x)e_7(y)e_7(z). }[/math]

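In the second we get

[math]\displaystyle{ e_7(x+y+z)=M(x,y+z)+e_7(x)e_7(y+z)=M(x,y+z)+e_7(x)M(y,z)+e_7(x)e_7(y)e_7(z). }[/math]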

Comparing these two we get an interesting relation for [math]\displaystyle{ M }[/math]: [math]\displaystyle{ M(x+y,z)+M(x,y)e_7(z) = M(x,y+z) + e_7(x)M(y,z) }[/math]. Since we'll only use [math]\displaystyle{ M }[/math] to find the next coefficient of [math]\displaystyle{ e }[/math], only its lowest degree (degree 8) part matters, and we can be sloppy about everything else. In particular, in the relation we just found we can replace [math]\displaystyle{ e_7 }[/math] by its constant term, namely 1, since [math]\displaystyle{ M(x,y)(e_7(z)-1) }[/math] and [math]\displaystyle{ (e_7(x)-1)M(y,z) }[/math] only have terms of degree 9 and up. Upon rearranging, we get the relation promised for [math]\displaystyle{ M }[/math]: [math]\displaystyle{ d^2M = M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y) = 0 }[/math].
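As a sanity check on this syzygy, the following Python sketch implements [math]\displaystyle{ d^2 }[/math] on polynomials stored as dictionaries keyed by exponent tuples. The sketch is our own, and the names d2 and mistake_in_degree are hypothetical. It applies [math]\displaystyle{ d^2 }[/math] to the degree 8 mistake of [math]\displaystyle{ e_7 }[/math], where [math]\displaystyle{ e_7 }[/math] is assumed to be the exponential series truncated after degree 7. The result is the zero polynomial, as the relation above predicts.

```python
from collections import defaultdict
from fractions import Fraction
from math import comb, factorial


def d2(m):
    """(d^2 m)(x, y, z) = m(y, z) - m(x+y, z) + m(x, y+z) - m(x, y).

    m maps (i, j) to the coefficient of x**i y**j; the result maps (i, j, k)
    to the coefficient of x**i y**j z**k, with zero coefficients dropped.
    """
    out = defaultdict(Fraction)
    for (i, j), c in m.items():
        out[(0, i, j)] += c  # m(y, z)
        for r in range(i + 1):  # m(x+y, z): expand (x+y)**i
            out[(r, i - r, j)] -= c * comb(i, r)
        for s in range(j + 1):  # m(x, y+z): expand (y+z)**j
            out[(i, s, j - s)] += c * comb(j, s)
        out[(i, j, 0)] -= c  # m(x, y)
    return {key: v for key, v in out.items() if v != 0}


def mistake_in_degree(a, n):
    """Degree-n part of e(x+y) - e(x)e(y), where e(x) = sum(a[k] * x**k) and a[k] = 0 past the list."""
    def coef(k):
        return a[k] if k < len(a) else Fraction(0)
    return {(i, n - i): coef(n) * comb(n, i) - coef(i) * coef(n - i) for i in range(n + 1)}


# e_7: the exponential series truncated after degree 7, so it fails [Main] in degree 8
e7 = [Fraction(1, factorial(k)) for k in range(8)]
M8 = mistake_in_degree(e7, 8)
print(d2(M8))  # prints {}: d^2 M vanishes
```

A polynomial that is not a cocycle, such as [math]\displaystyle{ x^2 }[/math] (the dictionary {(2, 0): 1}), gives a nonzero answer, namely the coefficients of [math]\displaystyle{ -x^2-2xy }[/math].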

Computing the Homology, Easy but Limited

Now let's prove that [math]\displaystyle{ H^2=0 }[/math] for our (piece of) chain complex. That is, letting [math]\displaystyle{ M(x,y) \in \mathbb{Q}[[x,y]] }[/math] be such that [math]\displaystyle{ d^2M=0 }[/math], we'll prove that for some [math]\displaystyle{ \epsilon(x) \in \mathbb{Q}[[x]] }[/math] we have [math]\displaystyle{ d^1\epsilon = M }[/math].

Write the two power series as [math]\displaystyle{ \epsilon(x) = \sum{\frac{\alpha_i}{i!}x^i} }[/math] and [math]\displaystyle{ M(x,y) = \sum{\frac{m_{ij}}{i!j!}x^i y^j} }[/math], where the [math]\displaystyle{ \alpha_i }[/math] are the unknowns we wish to solve for.

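The coefficient of [math]\displaystyle{ x^i y^j z^k }[/math] in [math]\displaystyle{ M(y,z)-M(x+y,z)+M(x,y+z)-M(x,y) }[/math] is

[math]\displaystyle{ \delta_{i0}\frac{m_{jk}}{j!k!}-\frac{m_{i+j,k}}{i!j!k!}+\frac{m_{i,j+k}}{i!j!k!}-\delta_{k0}\frac{m_{ij}}{i!j!}. }[/math]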

Here, [math]\displaystyle{ \delta_{i0} }[/math] is a Kronecker delta: 1 if [math]\displaystyle{ i=0 }[/math] and 0 otherwise. Since [math]\displaystyle{ d^2 M = 0 }[/math], this coefficient is zero. Multiplying by [math]\displaystyle{ i!j!k! }[/math] (and noting that, for example, the first term doesn't need an [math]\displaystyle{ i! }[/math] since the delta is only nonzero when [math]\displaystyle{ i=0 }[/math]) we get:

[math]\displaystyle{ \delta_{i0}m_{jk}-m_{i+j,k}+m_{i,j+k}-\delta_{k0}m_{ij}=0 }[/math]     [m]

An entirely analogous procedure tells us that the equations we must solve boil down to [math]\displaystyle{ \delta_{i0} \alpha_j - \alpha_{i+j} + \delta_{j0} \alpha_i = m_{ij} }[/math].
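For convenience, here is that analogous computation spelled out (a short derivation we add, using the normalization [math]\displaystyle{ \epsilon(x) = \sum\frac{\alpha_i}{i!}x^i }[/math] and the binomial identity [math]\displaystyle{ \frac{(x+y)^n}{n!}=\sum_{i+j=n}\frac{x^iy^j}{i!j!} }[/math]):

[math]\displaystyle{ (d^1\epsilon)(x,y)=\epsilon(y)-\epsilon(x+y)+\epsilon(x)=\sum_{j}\frac{\alpha_j}{j!}y^j-\sum_{i,j}\frac{\alpha_{i+j}}{i!j!}x^iy^j+\sum_{i}\frac{\alpha_i}{i!}x^i. }[/math]

So the coefficient of [math]\displaystyle{ x^iy^j/(i!j!) }[/math] in [math]\displaystyle{ d^1\epsilon }[/math] is [math]\displaystyle{ \delta_{i0}\alpha_j-\alpha_{i+j}+\delta_{j0}\alpha_i }[/math]. Equating it with the corresponding coefficient [math]\displaystyle{ m_{ij} }[/math] of [math]\displaystyle{ M }[/math] gives the equations just stated.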

By setting [math]\displaystyle{ i=j=0 }[/math] in this last equation we see that [math]\displaystyle{ \alpha_0 = m_{00} }[/math]. Now let [math]\displaystyle{ i }[/math] and [math]\displaystyle{ j }[/math] be arbitrary positive integers. This solves for most of the coefficients: [math]\displaystyle{ \alpha_{i+j}=-m_{ij} }[/math]. Any integer at least two can be written as [math]\displaystyle{ i+j }[/math], so this determines all of the [math]\displaystyle{ \alpha_m }[/math] for [math]\displaystyle{ m \ge 2 }[/math]. We just need to prove that [math]\displaystyle{ \alpha_m }[/math] is well defined, that is, that [math]\displaystyle{ \alpha_{i+j}=-m_{ij} }[/math] doesn't depend on [math]\displaystyle{ i }[/math] and [math]\displaystyle{ j }[/math] but only on their sum.

But when [math]\displaystyle{ i }[/math] and [math]\displaystyle{ k }[/math] are strictly positive, the relation [m] reads [math]\displaystyle{ m_{i+j,k} = m_{i,j+k} }[/math], which shows that we can "transfer" [math]\displaystyle{ j }[/math] from one index to the other; this is exactly what we wanted.

It only remains to find [math]\displaystyle{ \alpha_1 }[/math] but it's easy to see this is impossible: if [math]\displaystyle{ \epsilon }[/math] satisfies [math]\displaystyle{ d^1\epsilon = M }[/math], then so does [math]\displaystyle{ \epsilon(x)+kx }[/math] for any [math]\displaystyle{ k }[/math], so [math]\displaystyle{ \alpha_1 }[/math] is arbitrary. How do our coefficient equations tell us this?

Well, we can't find a single equation for [math]\displaystyle{ \alpha_1 }[/math]! We've already tried taking both [math]\displaystyle{ i }[/math] and [math]\displaystyle{ j }[/math] to be zero, and also taking them both positive. The only remaining option is to take one zero and the other positive. Doing so gives two necessary conditions for the existence of the [math]\displaystyle{ \alpha_m }[/math]: [math]\displaystyle{ m_{0r} = m_{r0} = 0 }[/math] for [math]\displaystyle{ r\gt 0 }[/math]. So no [math]\displaystyle{ \alpha_1 }[/math] comes up, but we're still not done, as we must check these two conditions. Fortunately, setting one of [math]\displaystyle{ i }[/math] and [math]\displaystyle{ k }[/math] to be zero and the other positive in the relation [m] does the trick. With [math]\displaystyle{ i=0 }[/math] and [math]\displaystyle{ k\gt 0 }[/math] it reads [math]\displaystyle{ m_{0,j+k}=0 }[/math], and with [math]\displaystyle{ k=0 }[/math] and [math]\displaystyle{ i\gt 0 }[/math] it reads [math]\displaystyle{ m_{i+j,0}=0 }[/math].
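The recipe of this section can be phrased as a small solver. The Python sketch below is our own illustration, and d1_coeffs and solve_d1 are hypothetical names. It works directly with the divided-power coefficients [math]\displaystyle{ m_{ij} }[/math] and [math]\displaystyle{ \alpha_i }[/math] used above, in the following steps:
- read [math]\displaystyle{ \alpha_0 }[/math] off [math]\displaystyle{ m_{00} }[/math];
- read [math]\displaystyle{ \alpha_n }[/math] off any [math]\displaystyle{ m_{ij} }[/math] with [math]\displaystyle{ i,j\gt 0 }[/math] and [math]\displaystyle{ i+j=n }[/math];
- check that the split does not matter and that [math]\displaystyle{ m_{0r}=m_{r0}=0 }[/math];
- leave [math]\displaystyle{ \alpha_1 }[/math] as a free parameter.

```python
from fractions import Fraction


def d1_coeffs(alpha, N):
    """Coefficients m_ij (i + j <= N) of d(eps), where eps(x) = sum(alpha[k] * x**k / k!).

    Implements delta_{i0} alpha_j - alpha_{i+j} + delta_{j0} alpha_i = m_ij.
    """
    m = {}
    for i in range(N + 1):
        for j in range(N + 1 - i):
            value = -alpha[i + j]
            if i == 0:
                value += alpha[j]
            if j == 0:
                value += alpha[i]
            m[(i, j)] = value
    return m


def solve_d1(m, N, alpha_1=0):
    """Find alpha_0, ..., alpha_N with d(eps) = M, given the m_ij of a cocycle M.

    alpha_1 never enters the equations, so the caller chooses it freely.
    """
    alpha = [Fraction(0)] * (N + 1)
    alpha[0] = Fraction(m[(0, 0)])  # from i = j = 0
    if N >= 1:
        alpha[1] = Fraction(alpha_1)
    for n in range(2, N + 1):
        # i, j > 0 with i + j = n: alpha_n = -m_ij, whichever split of n is used
        values = {-m[(i, n - i)] for i in range(1, n)}
        assert len(values) == 1, "m_ij depends on more than i + j"
        alpha[n] = Fraction(values.pop())
    # one index zero, the other positive: the necessary conditions m_0r = m_r0 = 0
    assert all(m[(0, r)] == 0 and m[(r, 0)] == 0 for r in range(1, N + 1))
    check = d1_coeffs(alpha, N)
    assert all(check[key] == m[key] for key in check)  # d(eps) really equals M
    return alpha
```

Changing alpha_1 leaves d1_coeffs unchanged. This is the coefficient-level form of the remark that [math]\displaystyle{ \epsilon(x)+kx }[/math] solves the same equation as [math]\displaystyle{ \epsilon(x) }[/math].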

Computing the Homology, Hard but Rewarding

Let [math]\displaystyle{ C_n^k }[/math] denote the space of homogeneous degree [math]\displaystyle{ n }[/math] polynomials in (commuting) variables [math]\displaystyle{ x_1,\ldots,x_k }[/math] (with rational coefficients) and let [math]\displaystyle{ d^k:C_n^k\to C_n^{k+1} }[/math] be defined by [math]\displaystyle{ d^k=\sum_{i=0}^{k+1}(-1)^i d^k_i }[/math], where [math]\displaystyle{ (d^k_0f)(x_1,\ldots,x_{k+1}):=f(x_2,\ldots,x_{k+1}) }[/math], [math]\displaystyle{ (d^k_if)(x_1,\ldots,x_{k+1}):=f(x_1,\ldots,x_i+x_{i+1},\ldots,x_{k+1}) }[/math] for [math]\displaystyle{ 1\leq i\leq k }[/math] and [math]\displaystyle{ (d^k_{k+1}f)(x_1,\ldots,x_{k+1}):=f(x_1,\ldots,x_k) }[/math]. It is easy to verify that [math]\displaystyle{ {\mathcal C}_n:=(C_n^\star, d) }[/math] is a chain complex, and that (for [math]\displaystyle{ k=1,2,3 }[/math]) it agrees with the degree [math]\displaystyle{ n }[/math] piece of the complex in [Complex]. We need to show that [math]\displaystyle{ H^2({\mathcal C}_n)=0 }[/math] for [math]\displaystyle{ n\gt 1 }[/math] (we don't need the vanishing of [math]\displaystyle{ H^2 }[/math] for [math]\displaystyle{ n=0,1 }[/math] as these degrees are covered by the initial condition [Init]). This follows from the following theorem.

Theorem. [math]\displaystyle{ H^1({\mathcal C}_1) }[/math] is [math]\displaystyle{ {\mathbb Q} }[/math]; otherwise [math]\displaystyle{ H^k({\mathcal C}_n)=0 }[/math].

Proof (sketch). It is easy to verify "by hand" that [math]\displaystyle{ \dim H^k({\mathcal C}_1)=\delta_{k1} }[/math]. For [math]\displaystyle{ n\gt 1 }[/math] let [math]\displaystyle{ {\mathcal C}_1^{\otimes n} }[/math] be the [math]\displaystyle{ n }[/math]th interior power of [math]\displaystyle{ {\mathcal C}_1 }[/math], whose [math]\displaystyle{ k }[/math]th chain group is [math]\displaystyle{ (C_1^k)^{\otimes n} }[/math] and whose differential is defined using the diagonal action of the [math]\displaystyle{ d^k_i }[/math]'s. The permutation group [math]\displaystyle{ S_n }[/math] acts on [math]\displaystyle{ {\mathcal C}_1^{\otimes n} }[/math] by permuting the tensor factors. If [math]\displaystyle{ R }[/math] denotes the trivial representation of [math]\displaystyle{ S_n }[/math], then [math]\displaystyle{ R\otimes_{S_n}{\mathcal C}_1^{\otimes n}={\mathcal C}_n }[/math], and so

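[math]\displaystyle{ H^\star({\mathcal C}_n)=H^\star(R\otimes_{S_n}{\mathcal C}_1^{\otimes n})=R\otimes_{S_n}H^\star({\mathcal C}_1^{\otimes n})=R\otimes_{S_n}\left(H^\star({\mathcal C}_1)\right)^{\otimes n}, }[/math]

and the result readily follows. Indeed, [math]\displaystyle{ \left(H^\star({\mathcal C}_1)\right)^{\otimes n} }[/math] is one-dimensional and concentrated in degree [math]\displaystyle{ n }[/math]. [math]\displaystyle{ S_n }[/math] acts on it by the sign representation, since permuting degree 1 classes introduces Koszul signs, so its [math]\displaystyle{ S_n }[/math]-coinvariants vanish for [math]\displaystyle{ n\gt 1 }[/math]. Note that the second equality holds because taking [math]\displaystyle{ S_n }[/math]-coinvariants is exact over [math]\displaystyle{ {\mathbb Q} }[/math]. The last equality uses the Eilenberg-Zilber-Künneth formula, which holds because [math]\displaystyle{ {\mathcal C}_n }[/math] (and especially [math]\displaystyle{ {\mathcal C}_1 }[/math]) is a co-simplicial space with the [math]\displaystyle{ d^k_i }[/math]'s as co-face maps and with [math]\displaystyle{ (s^k_i f)(x_1,\ldots,x_{k-1}):=f(x_1,\ldots,x_{i-1},0,x_i,\ldots,x_{k-1}) }[/math] as co-degeneracies.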
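The Theorem can be spot-checked by brute force in low degrees. The Python sketch below is our own illustration: the function names are hypothetical, and it is no substitute for the proof. It builds the matrix of [math]\displaystyle{ d^k }[/math] on the monomial basis of [math]\displaystyle{ C_n^k }[/math] and computes ranks over [math]\displaystyle{ {\mathbb Q} }[/math] with exact fractions. It then reports [math]\displaystyle{ \dim H^k({\mathcal C}_n)=\dim C_n^k-\operatorname{rank} d^k-\operatorname{rank} d^{k-1} }[/math] for [math]\displaystyle{ k\geq 1 }[/math]. It takes [math]\displaystyle{ C_n^0=0 }[/math] for [math]\displaystyle{ n\geq 1 }[/math], since a degree [math]\displaystyle{ n }[/math] polynomial in no variables vanishes.

```python
from collections import Counter
from fractions import Fraction
from itertools import combinations_with_replacement
from math import comb


def monomials(n, k):
    """Exponent tuples of the degree-n monomials in k variables (a basis of C_n^k)."""
    basis = []
    for combo in combinations_with_replacement(range(k), n):
        counts = Counter(combo)
        basis.append(tuple(counts[v] for v in range(k)))
    return basis


def coface(e, i):
    """d_i applied to the monomial with exponent tuple e, as {exponents: coefficient}."""
    k = len(e)
    if i == 0:  # f(x_2, ..., x_{k+1})
        return {(0,) + e: 1}
    if i == k + 1:  # f(x_1, ..., x_k)
        return {e + (0,): 1}
    p = e[i - 1]  # substitute x_i + x_{i+1} for the i-th variable and expand
    return {e[:i - 1] + (r, p - r) + e[i:]: comb(p, r) for r in range(p + 1)}


def differential(n, k):
    """Matrix of d^k = sum_i (-1)**i d_i from C_n^k to C_n^{k+1} (one row per target monomial)."""
    source, target = monomials(n, k), monomials(n, k + 1)
    row_of = {t: r for r, t in enumerate(target)}
    matrix = [[Fraction(0)] * len(source) for _ in target]
    for col, e in enumerate(source):
        for i in range(k + 2):
            for t, c in coface(e, i).items():
                matrix[row_of[t]][col] += (-1) ** i * c
    return matrix


def rank(matrix):
    """Rank over Q, by Gaussian elimination with exact fractions."""
    rows = [row[:] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for col in range(n_cols):
        pivot = next((p for p in range(r, len(rows)) if rows[p][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for below in range(r + 1, len(rows)):
            factor = rows[below][col] / rows[r][col]
            rows[below] = [a - factor * b for a, b in zip(rows[below], rows[r])]
        r += 1
    return r


def cohomology_dim(n, k):
    """dim H^k(C_n) = dim C_n^k - rank d^k - rank d^{k-1}, for k >= 1."""
    return len(monomials(n, k)) - rank(differential(n, k)) - rank(differential(n, k - 1))
```

For example, cohomology_dim(1, 1) is 1 and cohomology_dim(2, 2) is 0. The latter is the vanishing of [math]\displaystyle{ H^2 }[/math] in degree 2 that the construction of [math]\displaystyle{ e(x) }[/math] needs.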

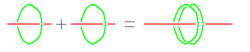

Further Examples

Let us briefly list a number of other places in mathematics where similar "non-linear algebraic functional equations" need to be solved. The techniques we have developed to solve [Main] can be applied in all of those cases, though sometimes they are fully successful and sometimes something breaks down somewhere along the way.

In knot theory solving this equation is as easy as calculating 1+1=2 on an abacus. See [Bar-Natan_Le_Thurston_03].

The "Duflo Homomorphism" Equation

This is the equation

written in [math]\displaystyle{ \left(S(L^\star)_L\otimes S(L^\star)_L\otimes U(L)\right)^L }[/math], for an unknown [math]\displaystyle{ \Upsilon\in \left(S(L^\star)_L\otimes U(L)\right)^L }[/math] where [math]\displaystyle{ L }[/math] is a Lie algebra. With appropriate qualifications, [math]\displaystyle{ S(L^\star)\otimes U(L)=\operatorname{Hom}(S(L),U(L)) }[/math], and our equation becomes the statement "[math]\displaystyle{ \Upsilon }[/math] is an algebra homomorphism between the invariants of [math]\displaystyle{ S(L) }[/math] and the invariants of [math]\displaystyle{ U(L) }[/math]", and its solution is the so-called "Duflo homomorphism". This is a typical "homomorphism wanted" equation and it has many relatives, including our primary example [math]\displaystyle{ e(x+y)=e(x)e(y) }[/math] which is also known as the statement "the additive group of [math]\displaystyle{ {\mathbb R} }[/math] is isomorphic to the multiplicative group of [math]\displaystyle{ {\mathbb R}_+ }[/math]". Note that in general, the equation for being a homomorphism, say [math]\displaystyle{ \varphi(xy)=\varphi(x)\varphi(y) }[/math], is non-linear in the homomorphism [math]\displaystyle{ \varphi }[/math] itself.

Where from cometh the syzygies? As in the case of the exponential function, they come from the fact that for a homomorphism, associativity in the target follows from associativity in the domain.

The "Formal Quantization" Equation

This is the equation

[math]\displaystyle{ (f\star g)\star h=f\star(g\star h), }[/math]

written for an unknown "[math]\displaystyle{ \star }[/math]-product", within a certain complicated space of "potential products" which resembles [math]\displaystyle{ \operatorname{Hom}(V\otimes V,V) }[/math] or [math]\displaystyle{ V^\star\otimes V^\star\otimes V }[/math] for some vector space [math]\displaystyle{ V }[/math]. This is a typical "algebraic structure wanted" equation, in which the unknown is an "algebraic structure" (an associative product [math]\displaystyle{ \star }[/math], in this case) and the equation is "the structure satisfies a law" (the associative law, in our case). Note that algebraic laws are often non-linear in the structure that they govern (the example relevant here is that the associative law is "quadratic as a function of the product"). Other examples abound, with the "structure" replaced by a "bracket" or anything else, and the "law" replaced by "Jacobi's equation" or whatever you fancy.

Where from cometh the syzygies? From the pentagon shown on the right.

The Drinfel'd Pentagon Equation

This is the equation

It is an equation written in some strange non-commutative algebra [math]\displaystyle{ {\mathcal A}^h_4 }[/math], for an unknown "function" [math]\displaystyle{ \Phi(a,b) }[/math] which in itself lives in some non-commutative algebra [math]\displaystyle{ {\mathcal A}^h_3 }[/math]. This equation is related to tensor categories, to quasi-Hopf algebras and (strange as it may seem) to knot theory, and is commonly summarized by either of the following two pictures:

This equation is a close friend of the Drinfel'd Hexagon Equation, and you can read more about both of them at [Drinfeld_90], [Drinfeld_91] and [Bar-Natan_97]. These equations have many relatives living in many further exotic spaces.

Where from cometh the syzygies? Here the equations come from the pentagon, so the syzygies must come from somewhere more complicated - the "Stasheff Polyhedron":

The Drinfel'd Twist Equation

This is the equation

This equation is written in another strange non-commutative algebra [math]\displaystyle{ {\mathcal A}_3 }[/math], which is a superset of [math]\displaystyle{ {\mathcal A}^h_3 }[/math]. The unknown [math]\displaystyle{ F }[/math] lives in some space [math]\displaystyle{ {\mathcal A}_2 }[/math], and [math]\displaystyle{ \Phi }[/math] and [math]\displaystyle{ \Phi' }[/math] are solutions of the Drinfel'd pentagon of before. You can read more about this equation at [Drinfeld_90], [Drinfeld_91] and [Le_Murakami_96]. This equation has many relatives living in many further exotic spaces.

Where from cometh the syzygies? Again from the pentagon, but in a different way.

The Braidor Equation

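This is the equation

[math]\displaystyle{ B(x_1,x_2,x_3)B(x_1+x_3,x_2,x_4)B(x_1,x_3,x_4)=B(x_1+x_2,x_3,x_4)B(x_1,x_2,x_4)B(x_1+x_4,x_2,x_3), }[/math]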

written in [math]\displaystyle{ {\mathcal A}_4 }[/math] for an unknown [math]\displaystyle{ B\in{\mathcal A}_3 }[/math]. It is related to the picture on the right and through it to the third Reidemeister move of braid theory and knot theory. At present, the only place to read more about it is 06-1350/Homework Assignment 4.

Where from cometh the syzygies? The third Reidemeister move comes from resolving a triple point. The corresponding syzygy comes from resolving a quadruple point. See a picture at 06-1350/Homework Assignment 4.

Further Further Examples

This subsection is by definition forever empty, for if a worthwhile further further example comes to mind, mine or yours, it should be added as a subsection right above.

References

[Bar-Natan_97] D. Bar-Natan, Non-associative tangles, in Geometric topology (proceedings of the Georgia international topology conference), (W. H. Kazez, ed.), 139-183, Amer. Math. Soc. and International Press, Providence, 1997.

[Bar-Natan_Le_Thurston_03] D. Bar-Natan, T. Q. T. Le and D. P. Thurston, Two applications of elementary knot theory to Lie algebras and Vassiliev invariants, Geometry and Topology 7-1 (2003) 1-31, arXiv:math.QA/0204311.

[Drinfeld_90] V. G. Drinfel'd, Quasi-Hopf algebras, Leningrad Math. J. 1 (1990) 1419-1457.

[Drinfeld_91] V. G. Drinfel'd, On quasitriangular Quasi-Hopf algebras and a group closely connected with [math]\displaystyle{ \operatorname{Gal}(\bar{\mathbb Q}/{\mathbb Q}) }[/math], Leningrad Math. J. 2 (1991) 829-860.

[Le_Murakami_96] T. Q. T. Le and J. Murakami, The universal Vassiliev-Kontsevich invariant for framed oriented links, Compositio Math. 102 (1996) 41-64, arXiv:hep-th/9401016.